The Impact of Europe's AI Act on Customer Experience

On 13 March 2024, the European Parliament passed the world’s first Artificial Intelligence regulation. Just as with privacy, the European Union is once again demonstrating its leadership in important legislation. The AI Act aims to ensure Europeans can trust what AI has to offer. Trust is also one of the anchor points in MultiMinds’ vision on data-driven customer experiences. So, does the AI Act empower us to further our cause? Or does it provide additional hoops for even the most well-meaning companies to jump through?

A world first

Trust is one of the key values in MultiMinds’ vision on data-driven customer experiences and a key element in our innovation strategy and research. Our goal is to provide organisations and customers with “Explainable AI”.

At the World Economic Forum (WEF) in Davos in January 2024, Bill Gates emphasised that AI would significantly impact everyone's lives within the next five years, and assured his audience that new opportunities would emerge. Gates specifically pointed to AI's capability to streamline tasks like paperwork for doctors and highlighted its accessibility through existing devices and internet connections. However, he also pointed out the risks of AI: election manipulation, fake news, and the potential of AI to surpass human intelligence are all significant concerns.

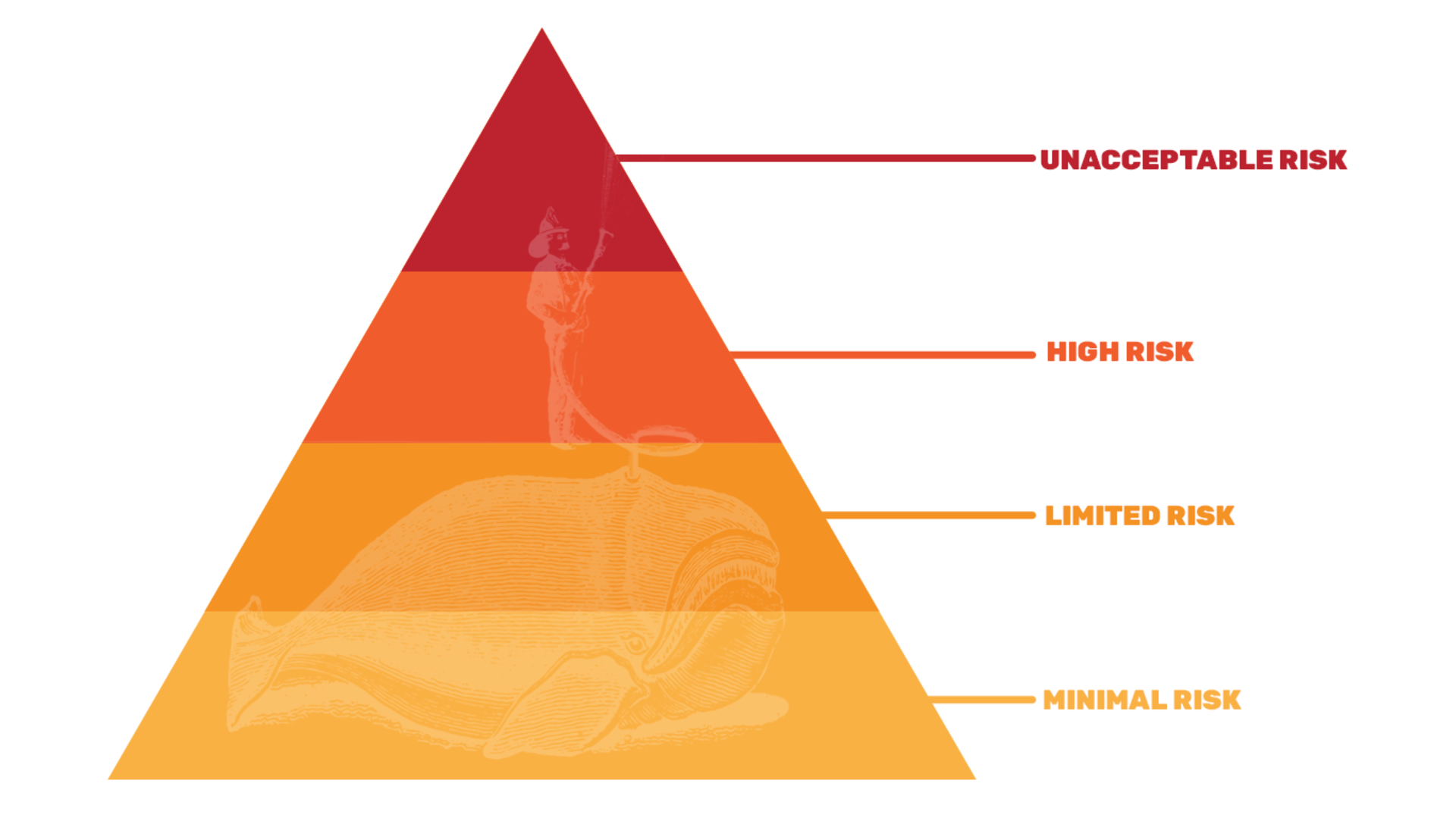

Let's talk about risk

Firstly, it’s important to determine where your AI use case sits in the AI Act’s risk framework. Four risk levels have been identified, ranging from unacceptable to minimal.

Minimal Risk

AI-powered solutions like spam filters or the use of AI in video games carry no specific obligations.

Limited Risk

This level applies to Generative AI. AI-generated content should be labelled as such, to provide trust and transparency towards consumers. It also means that when talking to a chatbot, humans should be made aware they are interacting with a machine.

High Risk

These AI systems are subject to a strict set of obligations before they are allowed to go to market in the European Union. Examples include driverless cars, exam scoring, CV-sorting for recruitment, automated evaluation of visa applications, and interpreting court rulings.

Unacceptable Risk

All AI which poses a clear threat to the safety, livelihoods and rights of people is banned. This encompasses use cases like social scoring, biometric categorisation or inferring the emotions of a natural person in the workplace or at school.
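To make the framework easier to apply in an internal review, the four levels above can be sketched as a simple lookup. This is a minimal illustration only: the names `RiskLevel`, `OBLIGATIONS`, `EXAMPLE_USE_CASES` and `obligations_for` are hypothetical, the obligations are paraphrased from this article rather than from the legal text, and the example list covers only the use cases mentioned above. It is not a substitute for a legal assessment.

```python
from enum import Enum


class RiskLevel(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Obligations paraphrased from the descriptions in this article (not legal text).
OBLIGATIONS = {
    RiskLevel.MINIMAL: "No specific obligations.",
    RiskLevel.LIMITED: "Label AI-generated content and tell users they are interacting with a machine.",
    RiskLevel.HIGH: "Meet a strict set of obligations before going to market in the EU.",
    RiskLevel.UNACCEPTABLE: "Banned.",
}

# Illustrative mapping using only the examples named in this article.
EXAMPLE_USE_CASES = {
    "spam filter": RiskLevel.MINIMAL,
    "video game ai": RiskLevel.MINIMAL,
    "website chatbot": RiskLevel.LIMITED,
    "cv sorting": RiskLevel.HIGH,
    "exam scoring": RiskLevel.HIGH,
    "social scoring": RiskLevel.UNACCEPTABLE,
}


def obligations_for(use_case: str) -> str:
    """Return the risk level and paraphrased obligation for a known example."""
    level = EXAMPLE_USE_CASES.get(use_case.strip().lower())
    if level is None:
        # Anything not in the list needs a proper, manual assessment.
        return "unknown: needs manual review"
    return f"{level.value}: {OBLIGATIONS[level]}"


if __name__ == "__main__":
    for case in ("Spam filter", "Website chatbot", "Loyalty scoring model"):
        print(case, "->", obligations_for(case))
```

Anything that does not appear in the lookup deliberately falls through to a manual review, since real use cases rarely match a textbook example exactly.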

What does this mean for me?

Now, most if not all of your use cases will probably fall under the “minimal” or “limited” risk category. But that doesn’t mean you don’t have any responsibility. The AI Act assigns responsibilities to both the providers (developers) of AI solutions and the organisations deploying them.

I am using Generative AI to create content for the articles I publish on my website and posts I do on social media. Do I need to disclose I used AI to create this content?

No: the AI Act explicitly states an exception when the AI-generated content has undergone a process of human review or editorial control.

I have a chatbot on my website to help people find the right content and convert. Should I mention they are talking to an AI?

Yes: “humans should be made aware that they are interacting with a machine so they can take an informed decision to continue or step back” - AI Act

I am using AI in parts of my customer journey, for example as part of a Customer Data Platform. What should I do as a deployer?

Start a technical audit of your AI models today, whether you developed them in-house or purchased them as part of a solution. In which risk category does your solution fall? Do these models comply with your own values as an organisation?
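As a starting point for such an audit, a simple inventory can help track which models still have open questions. The sketch below is one possible shape, not a prescribed method: `AIModelRecord`, its fields and `open_audit_items` are hypothetical names chosen for illustration, and the model names in the usage example are invented.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AIModelRecord:
    name: str
    origin: str  # "in-house" or "purchased"
    risk_level: Optional[str] = None  # "minimal", "limited", "high" or "unacceptable"
    matches_company_values: Optional[bool] = None  # None means not reviewed yet


def open_audit_items(records: List[AIModelRecord]) -> List[str]:
    """List the audit questions from the text that are still unanswered."""
    items = []
    for record in records:
        if record.risk_level is None:
            items.append(f"{record.name}: determine risk category")
        if record.matches_company_values is None:
            items.append(f"{record.name}: review against organisational values")
        elif not record.matches_company_values:
            items.append(f"{record.name}: conflicts with organisational values, follow up")
    return items


if __name__ == "__main__":
    inventory = [
        AIModelRecord("churn-predictor", "in-house", "minimal", True),
        AIModelRecord("cdp-recommendations", "purchased"),
    ]
    for item in open_audit_items(inventory):
        print(item)
```

Purchased solutions appear in the same inventory as in-house models, because the deployer's questions are the same in both cases.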

I am looking to buy an AI solution or a larger package which includes some AI features. How should I evaluate it?

As part of your selection process, include criteria specific to the robustness of the AI solution: is the AI accurate, unbiased and fair? How does it deal with data privacy? How is the technology governed and monitored?

What is the link between GDPR and the AI Act?

The AI Act does not replace or supersede the General Data Protection Regulation. As part of your development or evaluation of AI models, you need to obtain the appropriate consent from your users before their data is used to train AI models or is processed by them. Furthermore, in the pursuit of unbiased and fair AI solutions, any use of sensitive data according to GDPR (gender, race, political convictions, religious beliefs…) needs to be either avoided entirely or scrutinised as a high-risk AI application.